From Kitchen to AI: A Task-based Metric for Measuring Trust

Robert Porzel, University of Bremen.

Trust is an important factor in human-centric artificial intelligence, especially for the success and effectiveness of a collaborative task in which the participants rely on each other to achieve specific sub-goals. In household environments such as a kitchen, for example, either party can make mistakes that lead not only to failure to complete the task, but even to injury from hot or sharp appliances. Trust in a new system or technology is critical to its success, since people tend to employ systems that they trust and reject systems that they do not trust.

In the last few years, artificial agents such as vacuuming robots have become more common in household environments, and assistants for more complex tasks such as cooking or cleaning are being developed. To ensure that these new systems will be accepted, it is important to explore how much people trust an autonomous system to handle these tasks, how this trust changes during use, and what factors lead to an increase or decrease in trust. Toward that goal, applicable measures of trust are needed. We propose measuring a user's trust in an artificial collaborator during cooperative cooking tasks by analysing the tasks delegated to the artificial partner during collaborative execution of a recipe.

As the delegation of tasks among humans relies on trust, we propose that the tasks given to the artificial collaborator, e.g. while preparing a meal, can supply information on the level of trust the human has in it. If the human assigns intricate, dangerous or important tasks to the artificial agent, e.g. heating or cutting an ingredient, this would indicate that they trust this partner to complete the task successfully. Should they only delegate minor tasks to the robot, for example wiping the counter, it indicates that the robotic partner is only trusted to fulfil simple tasks where errors could easily be mitigated. Toward the goal of measuring trust based on task delegation, our approach considers three aspects of a task that could influence a human's tendency to delegate it: difficulty, risk and possibility for error mitigation. It was also deemed relevant whether a human would supervise the artificial collaborator during a task or even intervene. In addition, discount factors were considered that might convince a human to assign a task to a robot even though they do not completely trust it, e.g. the tediousness of a task or the inability to complete a task themselves. These aspects were combined into a basis for a scale that can be used to determine the level of trust the human put into the artificial partner when delegating a specific task to it.
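To make the combination of these aspects concrete, the following is a minimal Python sketch of how a per-task trust score might be computed. It is not the scale used in this work: the `DelegatedTask` fields, the 0 to 1 ranges, the equal weighting, and the oversight multipliers are all assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DelegatedTask:
    """One task handed to the robot. All numeric ratings are hypothetical."""
    name: str
    difficulty: float      # 0 = trivial, 1 = intricate
    risk: float            # 0 = harmless, 1 = dangerous
    mitigability: float    # 0 = errors irreversible, 1 = errors easily fixed
    supervised: bool = False   # human watched the robot during the task
    intervened: bool = False   # human stepped in during the task
    discount: float = 0.0      # 0..1, e.g. tediousness or inability to do it


def trust_score(task: DelegatedTask) -> float:
    """Return an illustrative trust score between 0 and 1.

    Harder, riskier and less mitigable tasks imply more trust when
    delegated; supervision, intervention and discount factors reduce it.
    The weights below are assumptions, not values from the study.
    """
    base = (task.difficulty + task.risk + (1.0 - task.mitigability)) / 3.0
    if task.intervened:
        oversight = 0.25
    elif task.supervised:
        oversight = 0.5
    else:
        oversight = 1.0
    return base * oversight * (1.0 - task.discount)


cutting = DelegatedTask("cut onion", difficulty=0.6, risk=0.9, mitigability=0.3)
wiping = DelegatedTask("wipe counter", difficulty=0.1, risk=0.1, mitigability=0.9)
watched_cutting = DelegatedTask("cut onion", 0.6, 0.9, 0.3, supervised=True)

print(trust_score(cutting) > trust_score(wiping))           # delegating cutting implies more trust
print(trust_score(watched_cutting) < trust_score(cutting))  # supervision lowers the score
```

A multiplicative form is used here so that a large discount factor (the human simply could not or did not want to do the task) drives the score toward zero regardless of how risky the task is. An additive or learned weighting would be an equally plausible choice.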

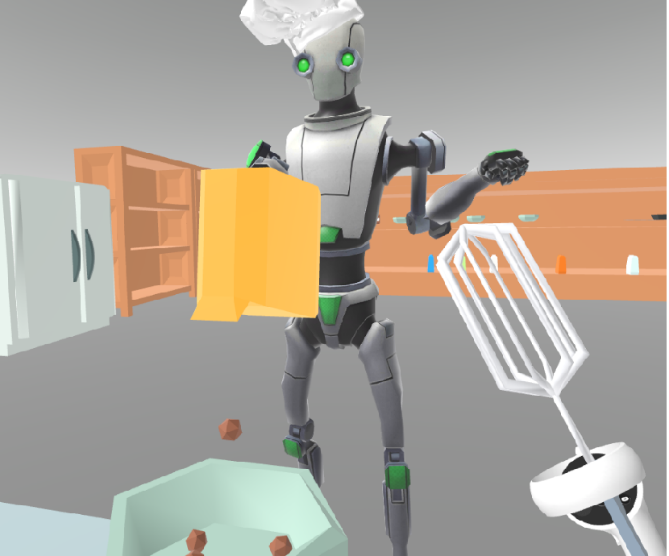

To observe humans during cooperative cooking with an artificial partner, a VR application was developed in the Unity game engine for use with an Oculus Quest HMD. In this application the user is placed in a kitchen environment together with a virtual robot. The user can interact with various objects in the kitchen by grabbing them with either their hands or the controllers and can then complete various cooking tasks by moving the objects in appropriate ways, e.g. moving a whisk in circular motions through a bowl containing the ingredients to be mixed. In addition, the user can order the robot to fulfil any of the cooking tasks needed to complete the recipe, e.g. portioning a certain amount of an ingredient into a bowl, or supporting tasks such as cleaning, tidying or fetching objects for the user. These orders are given through a delegation-type interface: the user states in a declarative manner which task the robot should fulfil, but is not required to give details on how the task should be completed.
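The declarative character of such an order can be illustrated with a small Python sketch. The actual application is built in Unity, so this is not its implementation: the `TaskKind`, `Order` and `Robot` names, and the `amount_g` parameter, are hypothetical and serve only to show that an order names what should be done and leaves the how to the robot.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskKind(Enum):
    """Hypothetical task categories drawn from the examples in the text."""
    PORTION = "portion"
    MIX = "mix"
    CLEAN = "clean"
    TIDY = "tidy"
    FETCH = "fetch"


@dataclass(frozen=True)
class Order:
    """A declarative order: which task, on which object, with what goal values.

    It deliberately contains no motion or procedure details.
    """
    kind: TaskKind
    target: str
    parameters: dict = field(default_factory=dict)


class Robot:
    """Stand-in for the virtual robot; it only records the orders it receives."""

    def __init__(self) -> None:
        self.log: list[Order] = []

    def execute(self, order: Order) -> str:
        # How the task is carried out is the robot's concern, not the user's.
        self.log.append(order)
        return f"robot will {order.kind.value} {order.target}"


robot = Robot()
message = robot.execute(Order(TaskKind.PORTION, "flour", {"amount_g": 200}))
print(message)
```

Keeping a log of the received orders is one simple way to obtain the list of delegated tasks that the trust analysis would later operate on.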

In the future, this metric could become part of a larger set of trust measures specific to cooperative tasks that includes other aspects, such as the phrasing of orders given to the robot. Similarly, it could be adapted to other household tasks that may be assigned to household robots. Predictions made by a graphical model based on these metrics could also be used to adjust robot behavior at runtime, calibrating trust to the appropriate level for optimal cooperation. The described test environment and scale could further be used to explore how different robot appearances and behaviors affect trust, as well as how trust develops over time when the human can observe the robot completing tasks successfully or making errors.
